CVPR 2020 Workshop on Adversarial Machine Learning in Computer Vision

Seattle, Washington

Although computer vision models have achieved strong performance on a variety of recognition tasks in recent years, they are known to be vulnerable to adversarial examples. The existence of adversarial examples shows that current computer vision models behave differently from the human visual system, and it also provides opportunities for understanding and improving these models.

In this workshop, we will focus on recent research and future directions in adversarial machine learning for computer vision. We aim to bring together experts from the computer vision, machine learning, and security communities to highlight recent progress in this area and to discuss the benefits of integrating advances in adversarial machine learning into general computer vision tasks. Specifically, we seek to study adversarial machine learning not only as a means of enhancing model robustness against adversarial attacks, but also as a guide for diagnosing and explaining the limitations of current computer vision models and for identifying potential strategies to improve them. We hope this workshop can shed light on bridging the gap between the human visual system and computer vision systems, and foster collaborations across the computer vision, machine learning, and security communities.

Sidharth Gupta (University of Illinois at Urbana-Champaign); Parijat Dube (IBM Research); Ashish Verma (IBM Research)

Binghui Wang (Duke University); Xiaoyu Cao (Duke University); Jinyuan Jia (Duke University); Neil Zhenqiang Gong (Duke University)

Tianfu Wu (NC State University)

Nataniel Ruiz (Boston University)

Jiawei Chen (Boston University)

Sravanti Addepalli (Indian Institute of Science)

Quentin Bouniot (CEA LIST)

Jiachen Sun (University of Michigan)

Kartik Gupta (Australian National University)

Ligong Han (Rutgers University)

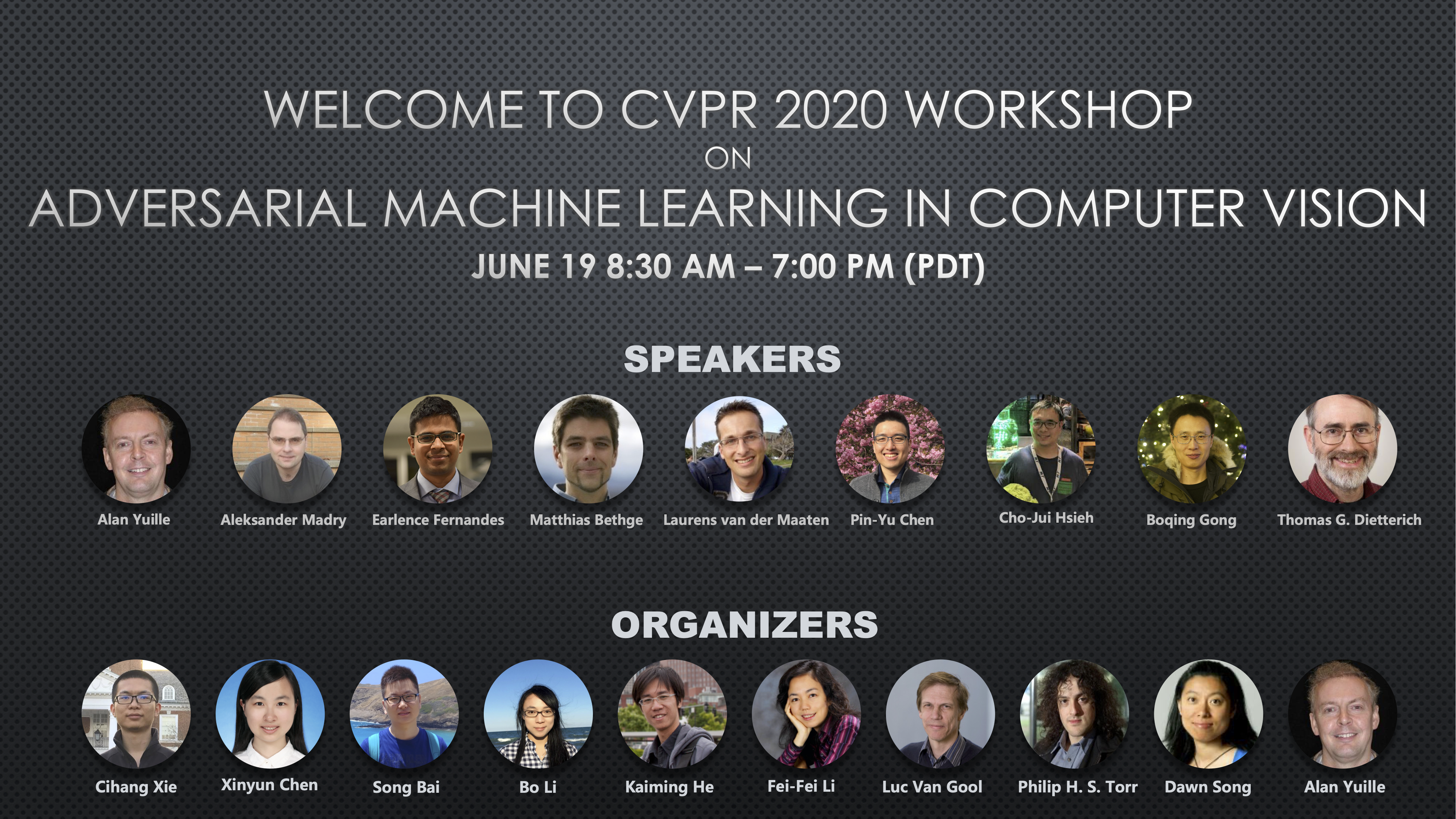

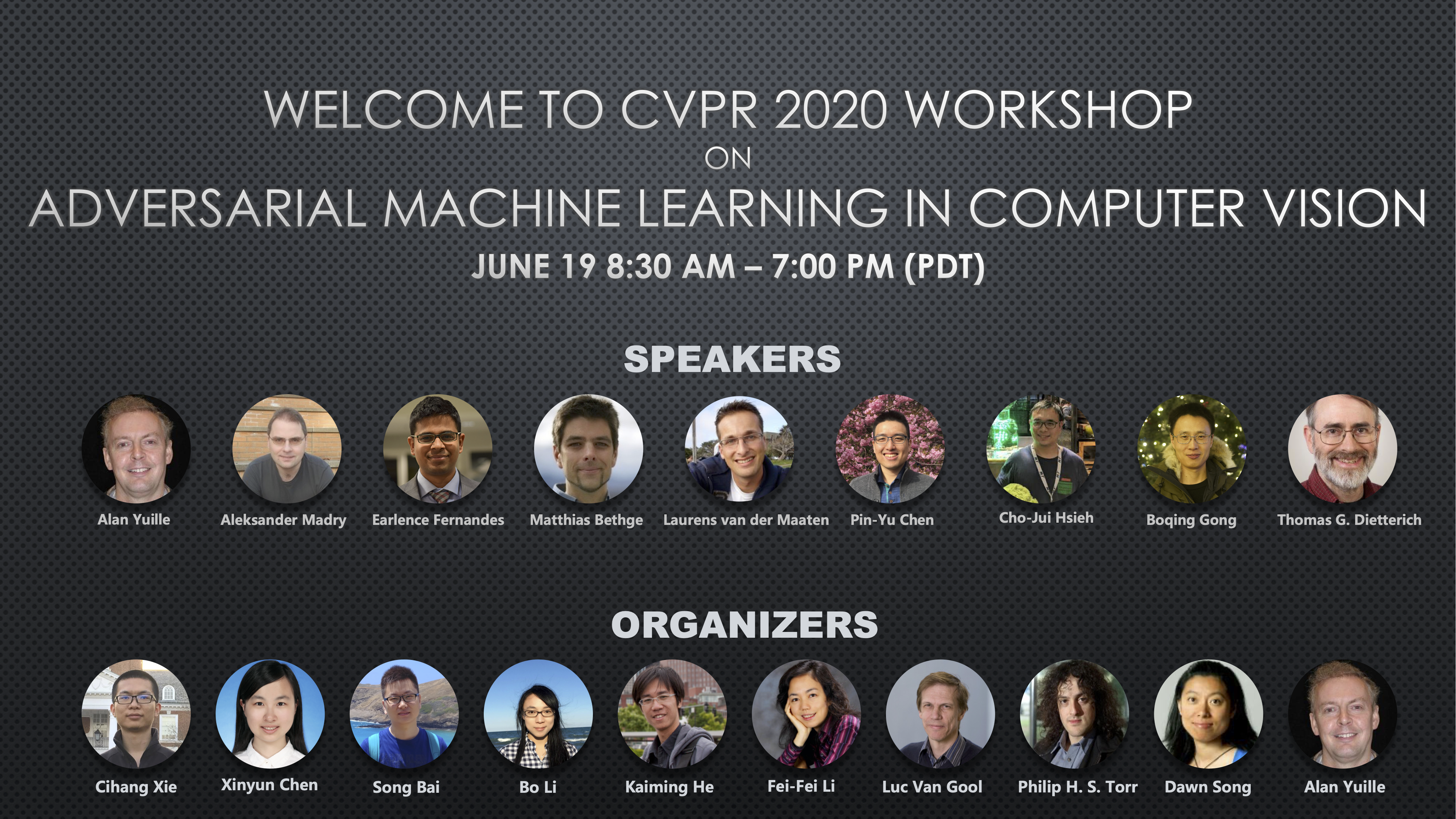

08:30 - 08:40 Opening Remarks

08:40 - 09:10 Invited Talk 1: Alan Yuille - Defending Against Random Occluder Attacks

09:10 - 09:40 Invited Talk 2: Aleksander Madry - What Do Our Models Learn?

09:40 - 10:10 Invited Talk 3: Earlence Fernandes - Physical Attacks on Object Detectors

10:10 - 10:40 Invited Talk 4: Matthias Bethge - Testing Generalization

10:40 - 11:10 Panel Discussion I: Alan Yuille, Aleksander Madry, Earlence Fernandes and Matthias Bethge

11:10 - 13:00 Poster Session I

13:00 - 14:00 Lunch Break

14:00 - 14:30 Invited Talk 5: Laurens van der Maaten - Adversarial Robustness: The End of the Early Years

14:30 - 15:00 Invited Talk 6: Pin-Yu Chen - Bridging Mode Connectivity in Loss Landscapes and Adversarial Robustness

15:00 - 15:30 Invited Talk 7: Cho-Jui Hsieh - Adversarial Robustness of Discrete Machine Learning Models

15:30 - 16:00 Invited Talk 8: Boqing Gong - Towards Visual Recognition in the Wild: Long-Tailed Sources and Open Compound Targets

16:00 - 16:30 Invited Talk 9: Thomas G. Dietterich - Setting Alarm Thresholds for Anomaly Detection

16:30 - 17:00 Panel Discussion II: Laurens van der Maaten, Pin-Yu Chen, Cho-Jui Hsieh, Boqing Gong and Thomas G. Dietterich

17:00 - 18:50 Poster Session II

18:50 - 19:00 Closing Remarks

Akshay Agarwal (IIIT Delhi); Mayank Vatsa (IIT Jodhpur); Richa Singh (IIIT Delhi); Nalini Ratha (IBM)

Tianfu Wu (NC State University); Zekun Zhang (NC State University)

Binghui Wang (Duke University); Xiaoyu Cao (Duke University); Jinyuan Jia (Duke University); Neil Zhenqiang Gong (Duke University)

Konda Reddy Mopuri (School of Informatics, University of Edinburgh); Vaisakh Shaj (University Of Lincoln); Venkatesh Babu Radhakrishnan (Indian Institute of Science)

Sidharth Gupta (University of Illinois at Urbana-Champaign); Parijat Dube (IBM Research); Ashish Verma (IBM Research)

Nataniel Ruiz (Boston University); Sarah Bargal (Boston University); Stan Sclaroff (Boston University)

Jiawei Chen (Boston University); Janusz Konrad (Boston University); Prakash Ishwar (Boston University)

Aniruddha Saha (UMBC); Akshayvarun Subramanya (UMBC); Koninika Patil (UMBC); Hamed Pirsiavash (UMBC)

Jamie Hayes (University College London)

Loc Truong (Western Washington University); Chace Jones (Western Washington University); Nicole Nichols (PNNL); Andrew August (PNNL); Brian Hutchinson (Western Washington University); Brenda Praggastis (PNNL); Robert Jasper (PNNL); Aaron R Tuor (PNNL)

Akshayvarun Subramanya (UMBC); Vipin Pillai (UMBC); Hamed Pirsiavash (UMBC)

Jeremiah Rounds (PNNL); Addie Kingsland (PNNL); Michael Henry (PNNL); Kayla Duskin (PNNL)

Carlos M Ortiz Marrero (PNNL); Brett Jefferson (PNNL)

Sravanti Addepalli (Indian Institute of Science); Vivek B S (Indian Institute of Science); Arya Baburaj (Indian Institute of Science); Gaurang Sriramanan (Indian Institute of Science); Venkatesh Babu Radhakrishnan (Indian Institute of Science)

Quentin Bouniot (CEA LIST); Angélique Loesch (CEA LIST); Romaric Audigier (CEA LIST)

Philipp Benz (KAIST); Chaoning Zhang (KAIST); Tooba Imtiaz (KAIST); In So Kweon (KAIST, Korea)

Philipp Benz (KAIST); Chaoning Zhang (KAIST); Tooba Imtiaz (KAIST); In So Kweon (KAIST, Korea)

Jiachen Sun (University of Michigan); Yulong Cao (University of Michigan, Ann Arbor); Qi Alfred Chen (UC Irvine); Zhuoqing Morley Mao (University of Michigan)

Kartik Gupta (Australian National University); Thalaiyasingam Ajanthan (ANU)

Robby S Costales (Columbia University); Chengzhi Mao (Columbia University); Raphael Norwitz (Nutanix); Bryan Kim (Stanford University); Junfeng Yang (Columbia University)

Philip Yao (University of Michigan); Andrew So (California State Polytechnic University at Pomona); Tingting Chen (California State Polytechnic University at Pomona); Hao Ji (California State Polytechnic University at Pomona)

Ligong Han (Rutgers University); Anastasis Stathopoulos (Rutgers University); Tao Xue (Rutgers University); Dimitris N. Metaxas (Rutgers)

Won Park (University of Michigan); Qi Alfred Chen (UC Irvine); Zhuoqing Morley Mao (University of Michigan)

Xinlei Pan (UC Berkeley); Yulong Cao (University of Michigan); Xindi Wu (Carnegie Mellon University); Eric Zelikman (Stanford University); Chaowei Xiao (University of Michigan); Yanan Sui; Rudrasis Chakraborty (UC Berkeley/ICSI); Ronald Fearing (UC Berkeley)

Please contact Cihang Xie or Xinyun Chen if you have questions. The webpage template is courtesy of the ICCV 2019 Tutorial on Interpretable Machine Learning for Computer Vision. Thanks to Yingwei Li for making this website.